Notifications

API

Public API

The following API controllers are defined:

- OrdersController: API for retrieving one or more orders with or without processing details and notification summaries

- EmailNotificationsOrdersController: API for placing new email notification order requests

- EmailNotificationsController: API for retrieving email notifications related to a single order

- SmsNotificationsOrdersController: API for placing new sms notification order requests

- SmsNotificationsController: API for retrieving sms notifications related to a single order

Internal API

The API controllers listed below are exclusively for use within the Altinn organization:

- Metrics controller: API for retrieving metrics on the use of the service

Private API

The API controllers listed below are exclusively for use within the Notification solution:

- Trigger controller: Functionality to trigger the start of the order and notification processing flows

Database

Data related to notification orders, notifications and recipients is persisted in a PostgreSQL database.

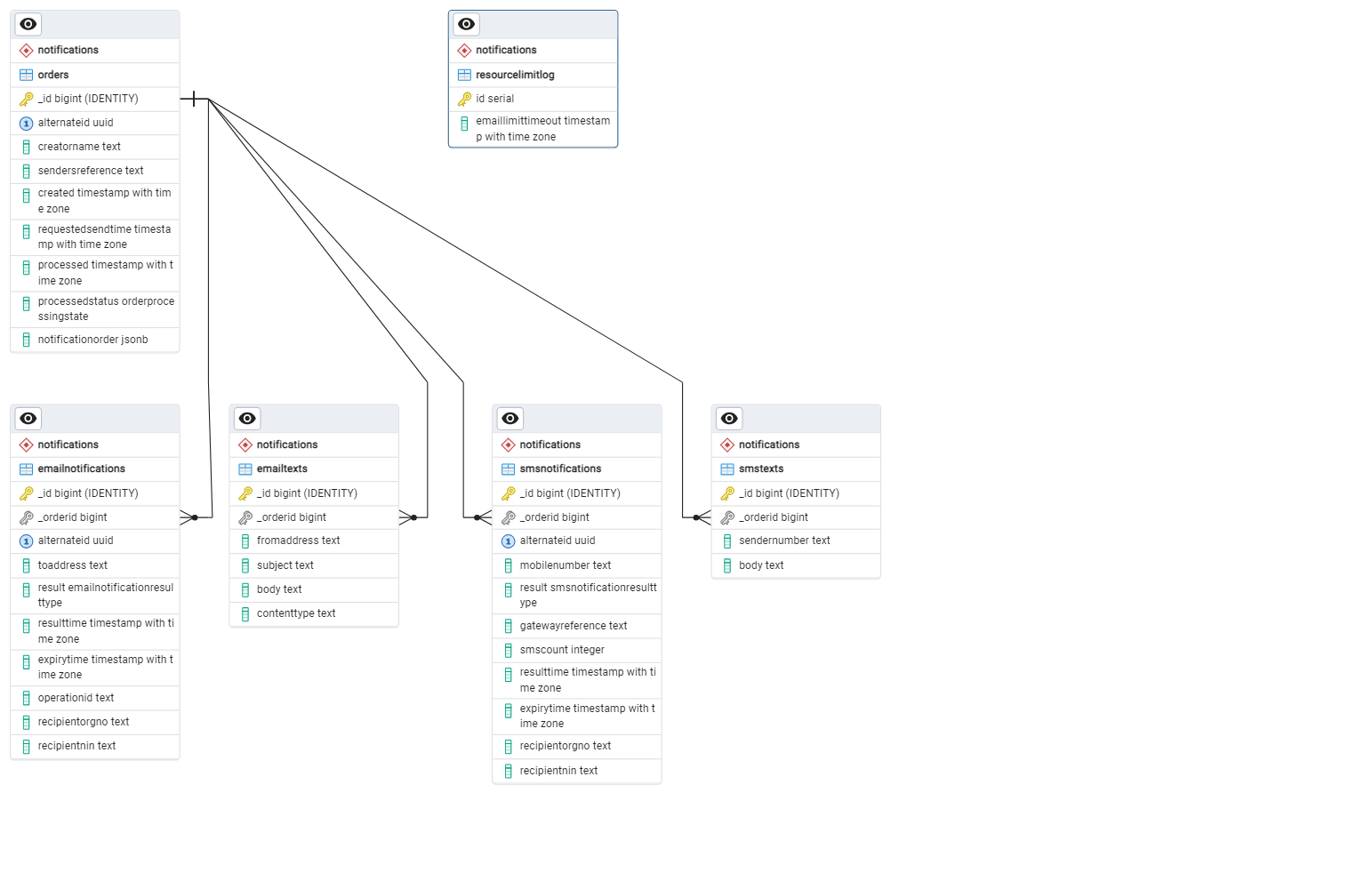

Each table in the notifications schema is described in the table below. The diagram above shows the relations between the tables.

| Table | Description |
|---|---|
| orders | Contains metadata for each notification order |
| emailtexts | Holds the static common texts related to an email notification |
| emailnotifications | Holds metadata for each email notification along with recipient contact details |
| smstexts | Holds the static common texts related to an sms notification |
| smsnotifications | Holds metadata for each sms notification along with recipient contact details |
| resourcelimitlog | Keeps track of resource limit outages for dependent systems, e.g. Azure Communication Services |

Integrations

Kafka

The Notifications microservice integrates with a Kafka broker, both to publish messages to and to consume messages from the topics relevant to the microservice.

Consumers:

The following Kafka consumers are defined:

- AltinnServiceUpdateConsumer: Consumes service updates from other Altinn services

- PastDueOrdersConsumer: Consumes notification orders that are ready to be processed for sending

- PastDueOrdersRetryConsumer: Consumes notification orders where the first attempt of processing has failed

- EmailStatusConsumer: Consumes updates on the send state of an email notification

- SmsStatusConsumer: Consumes updates on the send state of an sms notification
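The relationship between PastDueOrdersConsumer and PastDueOrdersRetryConsumer can be illustrated with a minimal in-memory sketch. It assumes that an order failing its first processing attempt is republished to a separate retry topic. The topic names, the `process` callback and the queue-based broker stand-in are hypothetical and not taken from the service's code.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional

# Hypothetical topic names; the real topic names are not given in this document.
PAST_DUE_TOPIC = "orders.pastdue"
RETRY_TOPIC = "orders.pastdue.retry"


class InMemoryBroker:
    """Stand-in for a Kafka broker: one FIFO queue per topic."""

    def __init__(self) -> None:
        self.topics: Dict[str, Deque[str]] = {}

    def publish(self, topic: str, message: str) -> None:
        self.topics.setdefault(topic, deque()).append(message)

    def poll(self, topic: str) -> Optional[str]:
        queue = self.topics.get(topic)
        return queue.popleft() if queue else None


def consume_past_due(broker: InMemoryBroker, process: Callable[[str], None]) -> None:
    """First attempt: orders that fail are republished to the retry topic."""
    while (order_id := broker.poll(PAST_DUE_TOPIC)) is not None:
        try:
            process(order_id)
        except Exception:
            broker.publish(RETRY_TOPIC, order_id)


def consume_retry(broker: InMemoryBroker, process: Callable[[str], None]) -> List[str]:
    """Second attempt: returns the ids of orders that failed again."""
    still_failing: List[str] = []
    while (order_id := broker.poll(RETRY_TOPIC)) is not None:
        try:
            process(order_id)
        except Exception:
            still_failing.append(order_id)
    return still_failing
```

Keeping the retry path on its own topic means a failing order does not block the orders queued behind it on the first topic.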

Producers:

A single producer, KafkaProducer, is implemented and used by all services that publish to Kafka.

See the Kafka architecture section for a closer description of the Kafka setup.

REST clients

Altinn APIs:

The Notifications microservice implements multiple API clients for the Altinn APIs. The clients are used to retrieve recipient data.

- ProfileClient: Consumes Altinn Profile’s internal API to retrieve, or check the existence of, contact points for recipients

Cron jobs

Multiple cron jobs have been set up to trigger actions in the application on a schedule.

The following cron jobs are defined:

| Job name | Schedule | Description |
|---|---|---|
| pending-orders-trigger | */1 * * * * | Sends request to endpoint to start processing of past due orders |
| send-email-trigger | */1 * * * * | Sends request to endpoint to start the process of sending all new email notifications |
| send-sms-trigger | */1 * * * * | Sends request to endpoint to start the process of sending all new sms notifications |

Each cron job runs in a Docker container based on the official Docker image for curl and sends a request to an endpoint in the Trigger controller.
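The schedule `*/1 * * * *` used by all three jobs means "every minute": the first cron field is the minute, and `*/N` matches every N-th minute. The following minimal sketch shows how such a minute field can be evaluated. It is an illustration only, the function names are made up for this example, and it is not how the cluster scheduler is implemented.

```python
def minute_field_matches(field: str, minute: int) -> bool:
    """Return True if a cron minute field matches the given minute (0-59).

    Only the forms '*', '*/N' and a plain number are supported, which is
    enough to illustrate the schedules used by the cron jobs.
    """
    if not 0 <= minute <= 59:
        raise ValueError("minute must be in the range 0-59")
    if field == "*":
        return True
    if field.startswith("*/"):
        step = int(field[2:])
        if step <= 0:
            raise ValueError("step must be a positive integer")
        return minute % step == 0
    return int(field) == minute


def runs_per_hour(schedule: str) -> int:
    """Count the minutes of one hour matched by the schedule's minute field.

    Assumes the remaining four fields are all '*', as in the cron job table.
    """
    minute_field = schedule.split()[0]
    return sum(minute_field_matches(minute_field, m) for m in range(60))
```

With this sketch, `runs_per_hour("*/1 * * * *")` evaluates to 60, so each trigger endpoint is called once per minute.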

The specifications of the cron jobs are hosted in a private repository in Azure DevOps (requires login).

Dependencies

The microservice makes use of a range of external services, Altinn services and .NET libraries to support the provided functionality. Key dependencies are described below.

External Services

| Service | Purpose | Resources |
|---|---|---|
| Apache Kafka on Confluent Cloud | Hosts the Kafka broker | Documentation |
| Azure Database for PostgreSQL | Hosts the database | Documentation |
| Azure API Management | Manages access to public API | Documentation |
| Azure Monitor | Telemetry from the application is sent to Application Insights | Documentation |
| Azure Key Vault | Safeguards secrets used by the microservice | Documentation |
| Azure Kubernetes Service (AKS) | Hosts the microservice and cron jobs | Documentation |

Altinn Services

| Service | Purpose | Resources |
|---|---|---|
| Altinn Authorization | Authorizes access to the API | Repository |
| Altinn Notifications Email* | Service for sending emails related to a notification | Repository |
| Altinn Notifications Sms* | Service for sending sms related to a notification | Repository |
| Altinn Profile | Provides contact details for individuals | Repository |

*Functional dependency to enable the full functionality of Altinn Notifications.

.NET Libraries

The Notifications microservice makes use of a range of libraries to support the provided functionality.

| Library | Purpose | Resources |
|---|---|---|
| AccessToken | Used to validate tokens in requests | Repository, Documentation |
| Confluent.Kafka | Used to integrate with the Kafka broker | Repository, Documentation |
| FluentValidation | Used to validate content of API requests | Repository, Documentation |
| JWTCookieAuthentication | Used to validate Altinn token (JWT) | Repository, Documentation |
| libphonenumber-csharp | Used to validate mobile numbers | Repository, Documentation |
| Npgsql | Used to access the database server | Repository, Documentation |
| Yuniql | Used for database migration | Repository, Documentation |

A full list of NuGet dependencies is available on GitHub.

Testing

The quality gates implemented for the project require 80 % code coverage for the unit and integration tests combined. xUnit is the test framework used, and the Moq library supports mocking parts of the solution.
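As an illustration of what "combined" coverage means, the sketch below merges the lines covered by each test suite before comparing against the 80 % threshold. How the actual quality gate tool computes coverage is not described here, so the function names and the line-based measure are assumptions made for this example.

```python
from typing import Set


def combined_coverage(total_lines: int,
                      unit_covered: Set[int],
                      integration_covered: Set[int]) -> float:
    """Fraction of lines covered by at least one of the two test suites."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    # A line counts once, even if both suites execute it.
    covered = unit_covered | integration_covered
    return len(covered) / total_lines


def passes_quality_gate(coverage: float, threshold: float = 0.80) -> bool:
    """Return True if the coverage meets the (assumed inclusive) threshold."""
    return coverage >= threshold
```

Because the two sets are merged, a line exercised only by the integration tests still contributes to the total.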

Unit tests

The unit test project is available on GitHub.

Integration tests

The integration test project is available on GitHub.

There are two dependencies for the integration tests:

- Kafka server: A YAML file has been created to easily start all Kafka-related dependencies in Docker containers.

- PostgreSQL database: A PostgreSQL database must be available wherever the tests are running, either in a Docker container or installed on the machine, and exposed on port 5432. A bash script has been set up to easily generate all required roles and rights in the database.
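Before running the integration tests, it can be useful to confirm that something is listening on port 5432. The following is a minimal sketch of such a check; the helper name is made up for this example, and the check only verifies that a TCP connection can be opened, not that the roles and rights are in place.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable hosts.
        return False
```

For a database running on the local machine, the check would be `is_port_open("localhost", 5432)`, where `localhost` is an assumption about the setup.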

See section on running the application locally if further assistance is required in running the integration tests.

Automated tests

The automated test project is available on GitHub.

The automated tests for this microservice are implemented with Grafana’s k6. The tool is specialized for load testing, but we also use it for automated API tests. The test set is used for both use case tests and regression tests.

Use case tests

All use case workflows are available on GitHub.

The use case tests are run every 15 minutes through GitHub Actions. The tests included are defined in the k6 test project. The aim is to run through the central functionality of the solution to ensure that it is running and available to our end users.

Regression tests

All regression test workflows are available on GitHub.

The regression tests are run once a week and 5 minutes after each deployment to a given environment. The tests included are defined in the k6 test project. The aim is to cover as much of our functionality as possible, to ensure that a new release does not break any existing functionality.

Hosting

Web API

The microservice runs in a Docker container hosted in AKS, and it is deployed as a Kubernetes deployment with autoscaling capabilities.

The notifications application runs on port 5090.

See the Dockerfile for details.

Cron jobs

The cron jobs run in Docker containers hosted in AKS and are started on a schedule configured in the Helm chart. A policy is in place to ensure that no two pods of the same job run concurrently.
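How the concurrency policy is configured is not stated here. In Kubernetes, setting `concurrencyPolicy: Forbid` on a CronJob has this effect: a scheduled run is skipped if the previous run has not finished. The sketch below simulates that behaviour under this assumption; the class and its fields are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ForbidConcurrencyScheduler:
    """Starts a run at a tick only if the previous run has finished."""

    busy_until: int = 0  # minute at which the current run ends
    started: List[int] = field(default_factory=list)
    skipped: List[int] = field(default_factory=list)

    def tick(self, now: int, run_duration: int) -> bool:
        """Handle one scheduled tick; return True if a run was started."""
        if now < self.busy_until:
            # Previous run still active: skip this tick instead of overlapping.
            self.skipped.append(now)
            return False
        self.busy_until = now + run_duration
        self.started.append(now)
        return True
```

With a one-minute schedule, a run that takes three minutes causes the next two ticks to be skipped rather than starting overlapping pods.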

Database

The database is hosted on a PostgreSQL flexible server in Azure.

Build & deploy

Web API

- Build and code analysis run in a GitHub workflow

- Build of the image is done in an Azure DevOps pipeline

- Deploy of the image is enabled with Helm and implemented in an Azure DevOps release pipeline

Cron jobs

- Deploy of the cron jobs is enabled with Helm and implemented in the same pipeline that deploys the web API.

Database

- Migration scripts are copied into the Docker image of the web API when it is built

- The scripts are executed on startup of the application, enabled by Yuniql
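The general idea of running versioned migration scripts on startup can be sketched as follows. This is not Yuniql's implementation: it uses an in-memory SQLite database as a stand-in for PostgreSQL, and the tracking table, version names and function name are hypothetical. It also assumes zero-padded version names so that plain sorting gives the intended order.

```python
import sqlite3
from typing import Dict, List


def apply_pending_migrations(conn: sqlite3.Connection,
                             scripts: Dict[str, str]) -> List[str]:
    """Apply scripts that are not yet recorded as applied, in version order.

    `scripts` maps a version name to a single SQL statement.
    Returns the versions applied by this call.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS applied_versions (version TEXT PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM applied_versions")}
    newly_applied: List[str] = []
    for version in sorted(scripts):
        if version in applied:
            continue  # already run on an earlier startup
        conn.execute(scripts[version])
        # Record the version so the script is skipped on the next startup.
        conn.execute("INSERT INTO applied_versions (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied
```

Calling the function a second time with the same scripts applies nothing, which is what makes it safe to run on every application startup.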

Run on local machine

Instructions on how to set up the service on a local machine for development or testing are covered by the README in the repository.